Abstract

A long-standing goal of AI systems is to perform complex multimodal reasoning like humans. Recently, large language models (LLMs) have made remarkable strides in such multi-step reasoning on the language modality solely by leveraging the chain of thought (CoT) to mimic human thinking. However, the transfer of these advancements to multimodal contexts introduces heightened challenges, including but not limited to the impractical need for labor-intensive annotation and the limitations in terms of flexibility, generalizability, and explainability. To evoke CoT reasoning in multimodality, this work first conducts an in-depth analysis of these challenges posed by multimodality and presents two key insights: “keeping critical thinking” and “letting everyone do their jobs” in multimodal CoT reasoning. Furthermore, this study proposes a novel DDCoT prompting that maintains a critical attitude through negative-space prompting and incorporates multimodality into reasoning by first dividing the reasoning responsibility of LLMs into reasoning and recognition and then integrating the visual recognition capability of visual models into the joint reasoning process. The rationale generated by DDCoT not only improves the reasoning abilities of both large and small language models in zero-shot prompting and fine-tuning learning, significantly outperforming state-of- the-art methods but also exhibits impressive generalizability and explainability.

Method

An overview of our DDCoT and its utilization to improve the multimodal reasoning of LMs. Note that although errors encounter in the second sub-problem during visual recognition, the language model rectifies this error in the joint reasoning step with critical thought

Table below shows the comparison of our DDCoT with the state-of-the-art models on zero-shot and fine-tuning benchmarks. Our approach consistently achieves superior performance compared to previous methods. Note that we further report the results of the recent works which have not been published but have been preprinted in the research community.

Main results (%). Size = backbone model size. GT-R means models are trained with ground truth rationales. Question classes: NAT = natural science, SOC = social science, LAN = language science, TXT = text context, IMG = image context, NO = no context, G1-6 = grades 1-6, G7-12 = grades 7-12. † denotes implementation by removing ground truth rationales when fine-tuning

Visualization

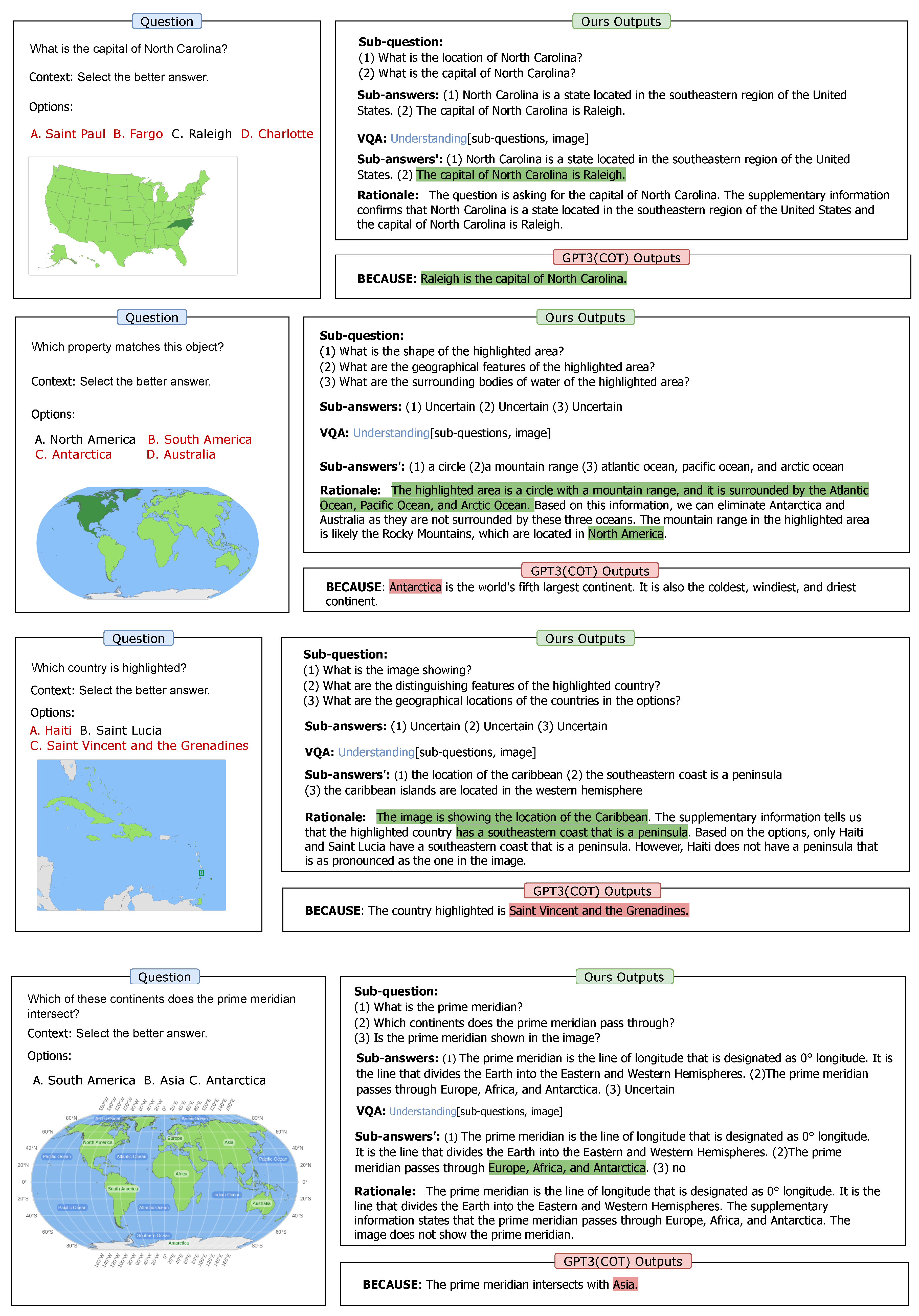

Here we visualize several qualitative results.

Showcases several map-related questions, demonstrating how our method integrates simple visual features (such as the shape of highlighted areas) with common knowledge to obtain correct reasoning and answers.

In the examples presented below, our method successfully identifies within the images, acquiring relevant knowledge

Illustrates four more complex questions, where our method leverages information obtained from the images to perform intricate reasoning. However, when it comes to the complex interaction between images and textual context, our method still fall into hallucinations, leading to erroneous reasoning and answers.