Our insights

Abstract

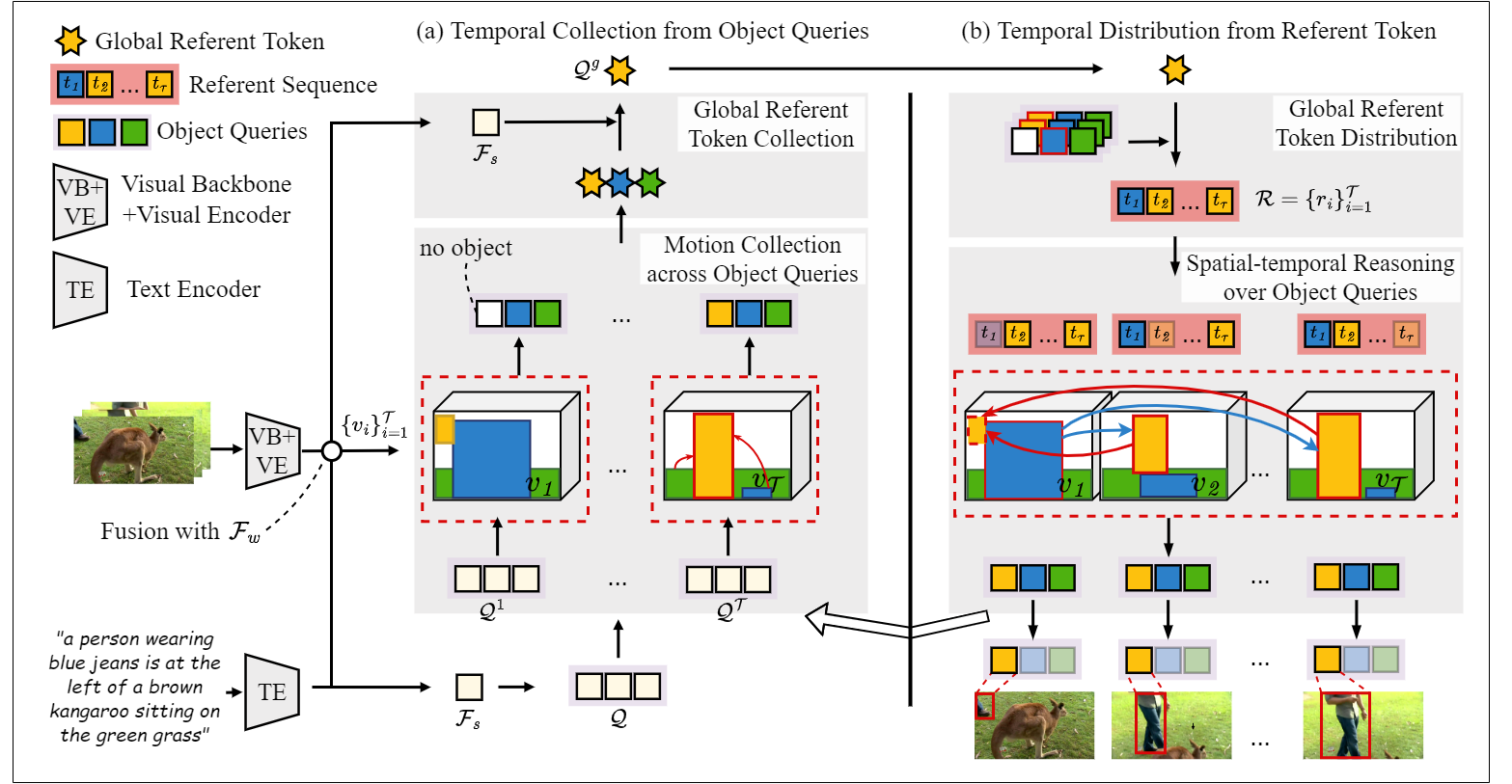

Referring video object segmentation aims to segment a referent throughout a video sequence according to a natural language expression. This requires aligning the expression with the objects' motions and their dynamic associations at the global video level, while segmenting objects at the frame level. To achieve this, we propose to simultaneously maintain a global referent token and a sequence of object queries. The former captures the video-level referent according to the language expression, while the latter serves to better locate and segment objects in each frame. The two interact through a temporal collection-distribution mechanism. The temporal collection mechanism collects global information for the referent token, from object queries to temporal motions to the language expression. In turn, the temporal distribution mechanism first distributes the referent token to a referent sequence across all frames and then performs efficient cross-frame reasoning between the referent sequence and the object queries in every frame. Experimental results show that our method consistently and significantly outperforms state-of-the-art methods on all benchmarks.

Method

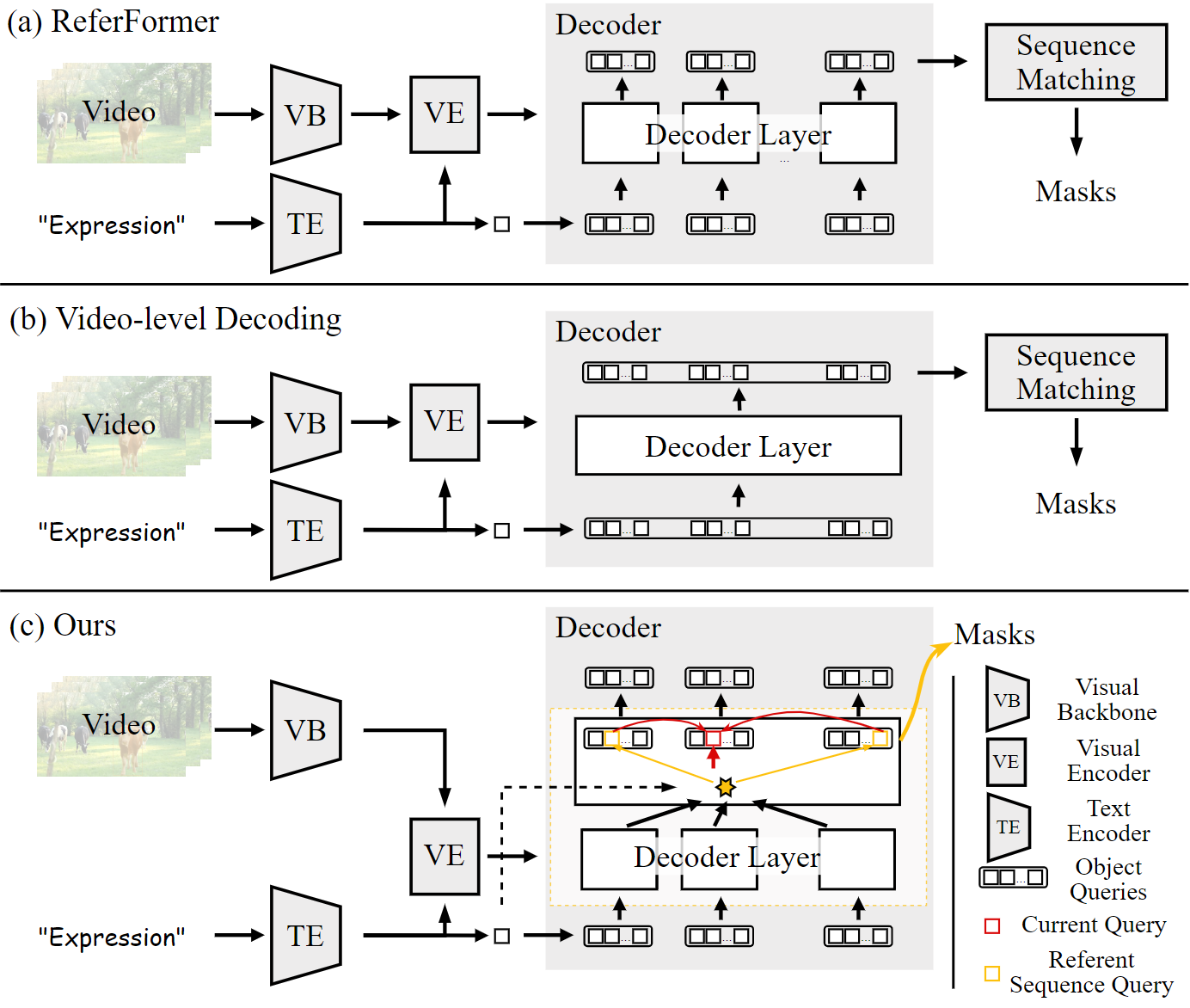

We introduce a sequence of object queries that capture object information in every frame independently, and we maintain a referent token that captures the global referent information for alignment with the language expression. The referent token and the object queries are updated alternately through the proposed temporal collection and distribution mechanisms, progressively identifying the target object and segmenting it more accurately in every frame.
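To make the collection direction concrete, here is a toy sketch of one collection step. It is our own simplification, not the authors' implementation: we assume single-head dot-product attention with no learned projections, tiny hand-made vectors, a plain residual update, and hypothetical function names (`attend`, `temporal_collection`). The referent token first attends to the object queries of each frame, then to the resulting per-frame summaries (a stand-in for temporal motion), and the result is finally aligned with the word features of the expression.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, keys: List[Vector]) -> Vector:
    """Single-head scaled dot-product attention; keys double as values (no learned projections)."""
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, k) * scale for k in keys])
    return [sum(w * k[d] for w, k in zip(weights, keys)) for d in range(len(query))]


def temporal_collection(referent: Vector,
                        object_queries: List[List[Vector]],
                        words: List[Vector]) -> Vector:
    """Hypothetical collection step: object queries -> temporal summary -> language."""
    # 1) In every frame, gather object information for the referent token.
    per_frame = [attend(referent, frame) for frame in object_queries]
    # 2) Aggregate the per-frame summaries over time (a crude stand-in for motion modelling).
    temporal = attend(referent, per_frame)
    # 3) Align the collected video-level information with the expression's word features.
    linguistic = attend(temporal, words)
    # Residual update: keep the previous referent state and add what was collected.
    return [r + t + l for r, t, l in zip(referent, temporal, linguistic)]


# Two frames with two object queries each, and a one-word "expression".
updated = temporal_collection(
    referent=[0.0, 0.0],
    object_queries=[[[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]],
    words=[[1.0, 0.0]],
)
print(updated)  # [1.5, 0.5]
```

In a real model each hop would use learned query/key/value projections, multiple heads, and several stacked layers; the sketch only illustrates the order in which information flows into the referent token.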

Specifically, we first introduce the encoders and define the object queries. We then present the temporal collection mechanism, which collects referent information for the referent token progressively, from object queries to temporal object motions to the language expression. Next, we introduce the temporal distribution mechanism, which distributes the global referent information to a referent sequence across frames and then to the object queries in each frame. Together, the collection-distribution mechanism explicitly captures object motions and performs spatial-temporal cross-modal reasoning over objects. Finally, we introduce the segmentation heads and loss functions. Note that because we explicitly maintain the referent token, we can directly identify the referent object in each frame, without the sequence matching required by previous query-based methods.
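The distribution direction, and why an explicit referent removes the need for sequence matching, can be sketched in the same toy setting. Assumptions (ours, not the paper's): each referent-sequence entry is the referent token plus its attention over that frame's object queries, cross-frame reasoning is reduced to a single attention hop of every entry over the object queries of all frames, and the referent in each frame is simply the object query with the highest dot-product similarity to that frame's entry. `temporal_distribution` and `select_referent` are hypothetical names.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, keys: List[Vector]) -> Vector:
    """Single-head scaled dot-product attention; keys double as values."""
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, k) * scale for k in keys])
    return [sum(w * k[d] for w, k in zip(weights, keys)) for d in range(len(query))]


def temporal_distribution(referent: Vector,
                          object_queries: List[List[Vector]]) -> List[Vector]:
    """Hypothetical distribution step: referent token -> referent sequence -> cross-frame reasoning."""
    # 1) Distribute the global token into one referent entry per frame,
    #    conditioned on that frame's object queries.
    sequence = [[r + a for r, a in zip(referent, attend(referent, frame))]
                for frame in object_queries]
    # 2) Cross-frame reasoning: every entry attends to the object queries of all frames.
    all_queries = [q for frame in object_queries for q in frame]
    return [[s + a for s, a in zip(entry, attend(entry, all_queries))]
            for entry in sequence]


def select_referent(sequence: List[Vector],
                    object_queries: List[List[Vector]]) -> List[int]:
    """Per frame, return the index of the object query most similar to that frame's referent entry."""
    return [max(range(len(frame)), key=lambda i: dot(entry, frame[i]))
            for entry, frame in zip(sequence, object_queries)]


# The target object sits in query slot 0 in frame 1 but in slot 1 in frame 2.
queries = [[[2.0, 0.0], [0.0, 2.0]], [[0.0, 2.0], [2.0, 0.0]]]
sequence = temporal_distribution([1.0, 0.0], queries)
print(select_referent(sequence, queries))  # [0, 1]
```

Because every frame is scored against the same distributed referent, the target is followed even though its query slot changes between frames; no cross-frame assignment (such as Hungarian matching of query sequences) is needed in this toy setting.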

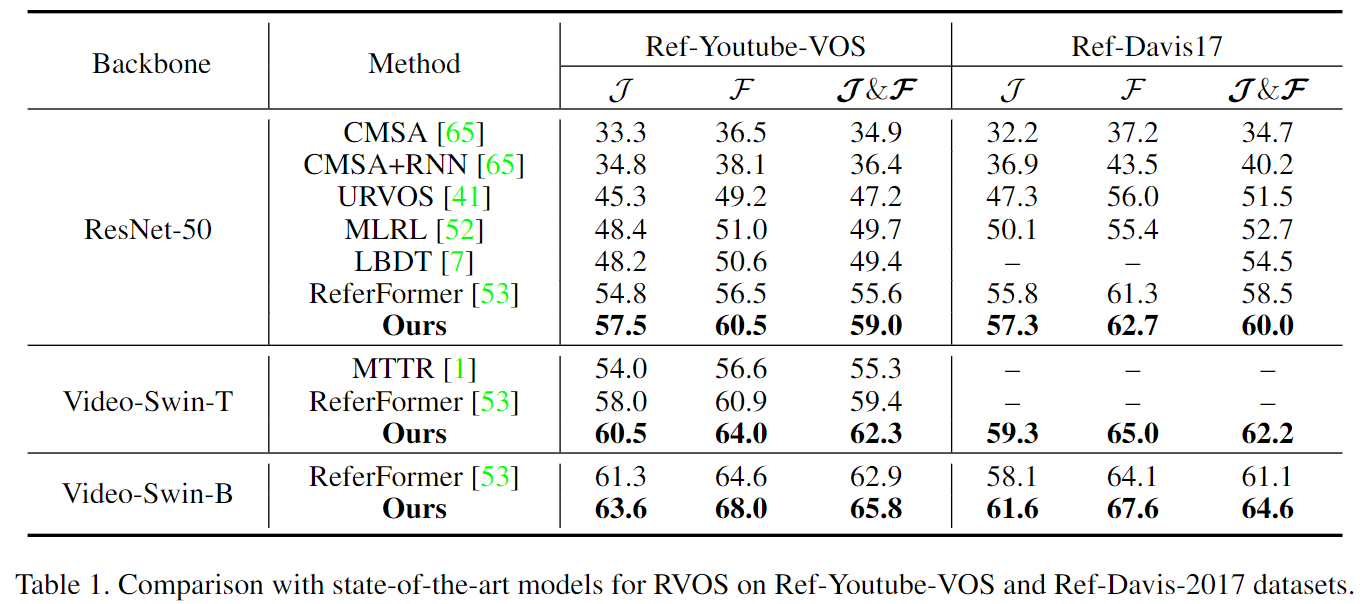

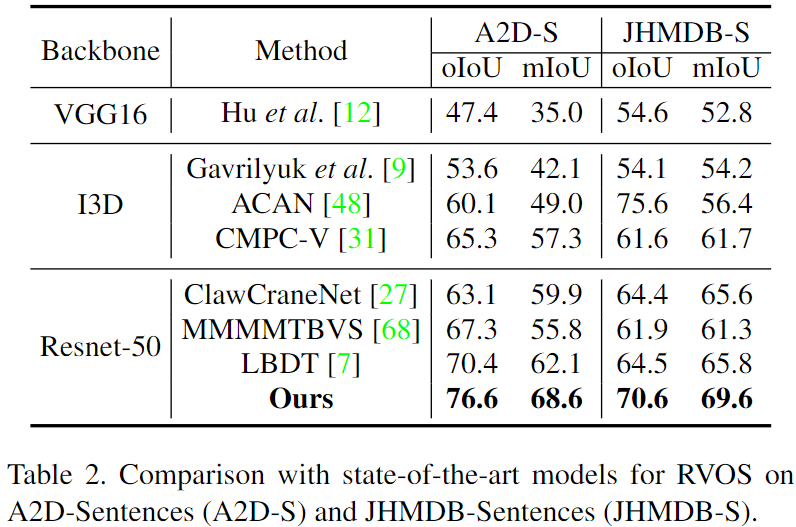

We compare TempCD with state-of-the-art methods on four benchmarks, as shown in the tables below. TempCD consistently outperforms them on all datasets.

Main Results

With a standard ResNet-50 visual backbone, our model improves the J, F, and J&F metrics by 2.7%, 4%, and 3.4%, respectively, on the Ref-YouTube-VOS dataset. With the stronger temporal visual backbone Video-Swin-B, our approach also consistently outperforms the previous state-of-the-art model on the Ref-DAVIS-2017 dataset, achieving a 3.5% increase on these metrics.
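As a quick consistency check on the Ref-YouTube-VOS numbers: under the standard DAVIS-style definition, J&F is the average of region similarity J and contour accuracy F, so its gain should equal the average of the two individual gains, up to rounding of the reported values.

$$
\mathcal{J}\&\mathcal{F} = \tfrac{1}{2}\left(\mathcal{J} + \mathcal{F}\right)
\;\Rightarrow\;
\Delta(\mathcal{J}\&\mathcal{F}) = \tfrac{1}{2}\left(\Delta\mathcal{J} + \Delta\mathcal{F}\right) = \tfrac{1}{2}\left(2.7 + 4\right) = 3.35 \approx 3.4
$$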

As presented in the table, on the remaining two benchmarks our approach improves Overall IoU by 6.2% and Mean IoU by 5.2% on average over the previous state-of-the-art method. Unlike Ref-YouTube-VOS, these datasets mainly provide segmentation annotations for keyframes that capture actions.
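The two metrics weight samples differently. The sketch below assumes the definitions commonly used for referring-segmentation benchmarks (Overall IoU as total intersection over total union across the test set, Mean IoU as the average of per-sample IoUs); the helper names and the convention of scoring an empty union as 1.0 are our own assumptions.

```python
from typing import List, Sequence, Tuple

Mask = Sequence[int]  # flattened binary mask: 1 = object pixel, 0 = background


def intersection_union(pred: Mask, gt: Mask) -> Tuple[int, int]:
    inter = sum(1 for p, g in zip(pred, gt) if p and g)
    union = sum(1 for p, g in zip(pred, gt) if p or g)
    return inter, union


def overall_and_mean_iou(preds: List[Mask], gts: List[Mask]) -> Tuple[float, float]:
    pairs = [intersection_union(p, g) for p, g in zip(preds, gts)]
    total_inter = sum(i for i, _ in pairs)
    total_union = sum(u for _, u in pairs)
    # Overall IoU: accumulate pixels over the whole test set, then divide once.
    overall = total_inter / total_union if total_union else 1.0
    # Mean IoU: average per-sample IoUs (an empty union is counted as a perfect match here).
    per_sample = [i / u if u else 1.0 for i, u in pairs]
    mean = sum(per_sample) / len(per_sample)
    return overall, mean


# A large object segmented well (IoU 7/8) and a small object missed entirely (IoU 0).
preds = [[1, 1, 1, 1, 1, 1, 1, 0], [0, 1, 0, 0]]
gts = [[1, 1, 1, 1, 1, 1, 1, 1], [1, 0, 0, 0]]
print(overall_and_mean_iou(preds, gts))  # (0.7, 0.4375)
```

Overall IoU is dominated by large objects, while Mean IoU gives every sample equal weight, so reporting both indicates whether the gains come from large or small referents.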

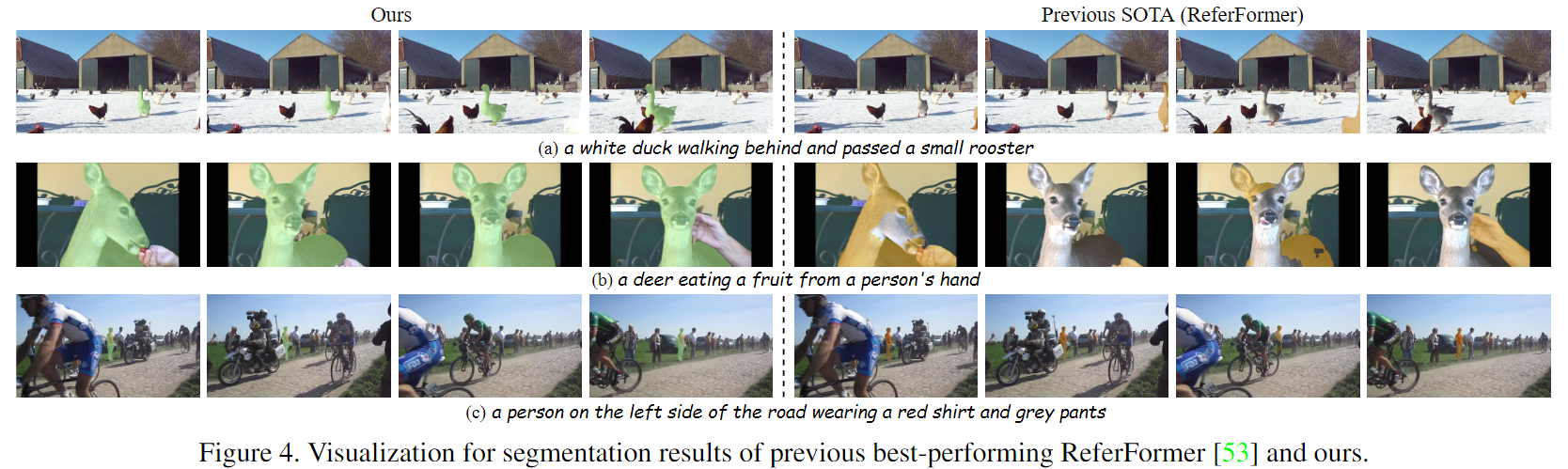

Visualization

Here we visualize several qualitative results. The referring expression in (a) describes the motion of a white duck, which is the key cue distinguishing it from the similar ducks around it. TempCD captures the referent's motion, aligns it with the language expression, and thereby localizes and segments that specific duck accurately.

In (b), the action described for the deer appears only in some frames rather than throughout the video. Through the collection and distribution mechanisms, our approach captures the referent's global semantics, including its motion, and aligns them with the referring expression, bridging the gap between frame-level semantics and the expression. These observations underscore the value of temporal interaction for accurate segmentation across frames.

Besides, (c) shows that our method can still precisely locate and segment the referent in complex scenes. In the second frame, the referent is occluded by another bicycle. Through cross-frame interactions between the visible objects and those represented by the referent sequence, our approach recognizes that none of the visible entities in this frame corresponds to the referent.